Ridge and Lasso Regression are two popular regularization techniques used in regression analysis for predicting continuous variables. Both add a penalty to ordinary least squares that keeps the coefficients small, which helps control overfitting, and Lasso can also identify the significant variables that affect the outcome by dropping the rest. In this article, we will discuss the strengths and weaknesses of Ridge and Lasso Regression models and how to choose the best technique for your regression problem.

Ridge Regression: Strengths and Weaknesses

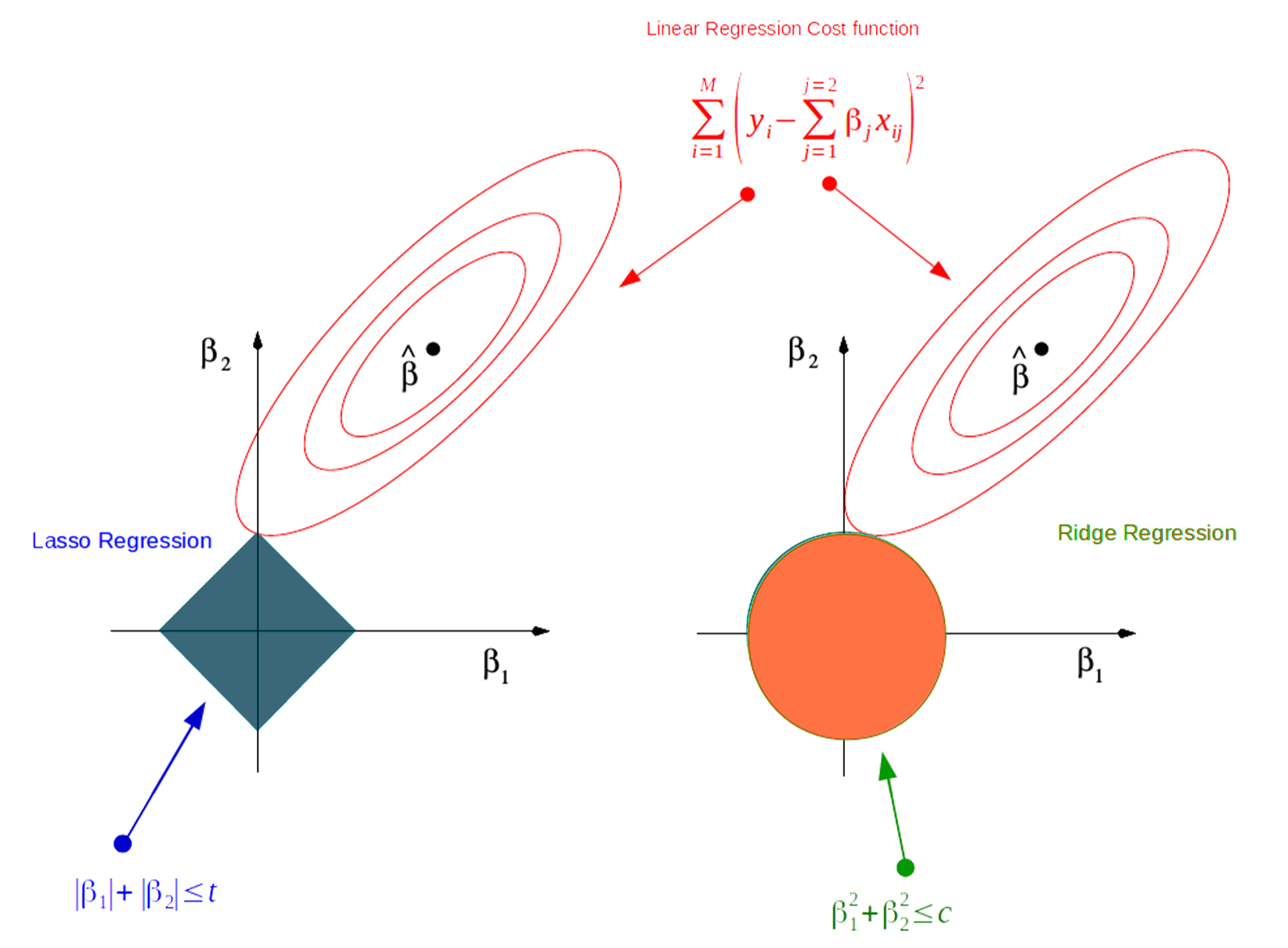

Ridge regression is a regularization technique commonly used to analyze data that suffers from multicollinearity, which occurs when the independent variables in a regression model are highly correlated. Ridge regression adds a penalty term to the Ordinary Least Squares (OLS) loss: the squared L2 norm of the coefficients, multiplied by a tuning parameter λ. This penalty shrinks the magnitudes of all coefficients toward zero. When variables are highly correlated, it spreads the weight across them instead of letting their coefficients grow large with opposite signs.
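To make the L2 penalty concrete, here is a minimal NumPy sketch that fits ridge regression with its closed-form solution, β = (XᵀX + λI)⁻¹Xᵀy. It assumes standardized features, a centered target, and no intercept; `ridge_fit` is a hypothetical helper name and the data is synthetic. As λ grows, the coefficients shrink toward zero, but none of them, not even those of the two pure-noise features, becomes exactly zero.

```python
import numpy as np


def ridge_fit(X, y, lam):
    """Closed-form ridge solution: (X^T X + lam * I)^(-1) X^T y.

    Assumes the columns of X are standardized and y is centered,
    so no intercept term is fitted.
    """
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    # Solving the linear system is more stable than forming the inverse.
    return np.linalg.solve(A, X.T @ y)


rng = np.random.default_rng(0)
n = 100
X = rng.normal(size=(n, 4))
X = (X - X.mean(axis=0)) / X.std(axis=0)
# Only the first two features affect y; the last two are pure noise.
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.5, size=n)
y = y - y.mean()

for lam in [0.1, 1.0, 10.0, 100.0]:
    beta = ridge_fit(X, y, lam)
    # Print lambda, the coefficients, and their overall L2 norm.
    print(lam, np.round(beta, 3), round(float(np.linalg.norm(beta)), 3))
```

Using `np.linalg.solve` avoids forming the matrix inverse explicitly, which is the numerically safer choice.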

Advantages of Ridge Regression:

– Reduces the variance of the model and helps prevent overfitting
– Handles multicollinearity well
– Produces reliable results even when the number of independent variables is large
– Small changes in the data do not significantly change the results
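The stability claims can be checked with a small experiment, again a sketch on synthetic data with a hypothetical `ridge_fit` helper (setting λ = 0 reduces it to OLS). Two features are nearly identical copies, and the model is refitted after adding a little extra noise to the target. The OLS coefficients can swing widely between the two fits, while the ridge coefficients barely move.

```python
import numpy as np


def ridge_fit(X, y, lam):
    # Closed-form ridge: (X^T X + lam*I)^(-1) X^T y; lam=0 gives OLS.
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


rng = np.random.default_rng(1)
n = 100
x1 = rng.normal(size=n)
# x2 is almost an exact copy of x1: strong multicollinearity.
x2 = x1 + 0.001 * rng.normal(size=n)
X = np.column_stack([x1, x2])
y = x1 + x2 + 0.1 * rng.normal(size=n)
# A second sample: same X, slightly different noise in y.
y_perturbed = y + 0.1 * rng.normal(size=n)

for lam, name in [(0.0, "OLS"), (1.0, "ridge")]:
    b1 = ridge_fit(X, y, lam)
    b2 = ridge_fit(X, y_perturbed, lam)
    print(name, np.round(b1, 2), np.round(b2, 2))
```

The difference comes from how each method treats the nearly flat direction in which x1 and x2 differ: OLS divides by a tiny singular value there, while the ridge penalty caps that amplification.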

Disadvantages of Ridge Regression:

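– Cannot perform automatic variable selection: coefficients are shrunk toward zero but never set exactly to zero
– Usually less effective than Lasso when there are many independent variables but only a few of them matter
– Lacks interpretability: because every variable stays in the model, it is difficult to interpret the relationship between the independent and dependent variables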

Lasso Regression: Strengths and Weaknesses

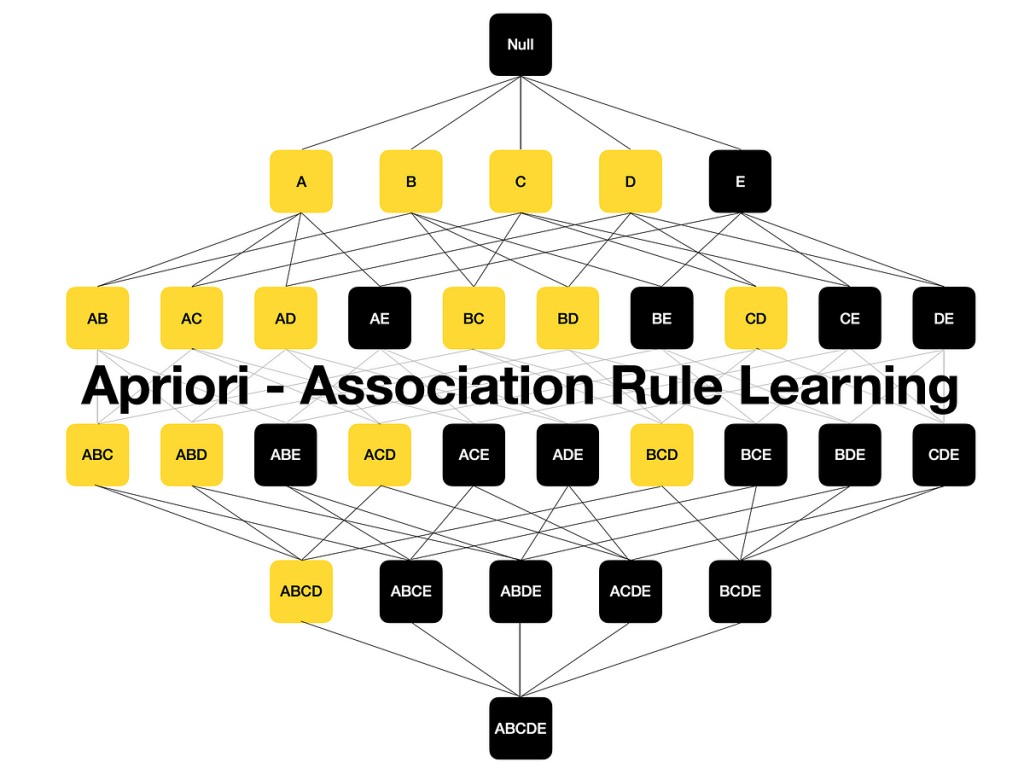

Lasso regression is also a regularization technique used in regression analysis to prevent overfitting. It adds a different penalty term to the OLS loss: the L1 norm of the coefficients, that is, the sum of their absolute values. This penalty shrinks the coefficients toward zero and sets the coefficients of the least important variables exactly to zero. Unlike Ridge Regression, Lasso Regression can therefore perform automatic variable selection.
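Because the L1 penalty is not differentiable at zero, lasso generally has no closed-form solution. One common way to fit it is cyclic coordinate descent with soft-thresholding, sketched below in NumPy. The objective scaling, (1/(2n))‖y − Xβ‖² + α‖β‖₁, follows the convention documented for scikit-learn's `Lasso`; `lasso_cd` and `soft_threshold` are hypothetical helper names, and the data is synthetic with standardized features and a centered target. The soft-threshold step returns exactly 0.0 whenever a feature's correlation with the partial residual is at most α, which is how lasso eliminates variables.

```python
import numpy as np


def soft_threshold(z, t):
    # Shrinks z toward zero by t and returns exactly 0.0 when |z| <= t.
    return np.sign(z) * max(abs(z) - t, 0.0)


def lasso_cd(X, y, alpha, n_iter=200):
    """Minimize (1/(2n)) * ||y - X b||^2 + alpha * ||b||_1 by cyclic
    coordinate descent. Assumes standardized columns and centered y."""
    n, p = X.shape
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n  # equals 1 for standardized columns
    residual = y.copy()
    for _ in range(n_iter):
        for j in range(p):
            # Add feature j's contribution back to get the partial residual.
            residual += X[:, j] * beta[j]
            rho = X[:, j] @ residual / n
            beta[j] = soft_threshold(rho, alpha) / col_sq[j]
            # Remove the updated contribution again.
            residual -= X[:, j] * beta[j]
    return beta


rng = np.random.default_rng(0)
n = 200
X = rng.normal(size=(n, 5))
X = (X - X.mean(axis=0)) / X.std(axis=0)
# Only features 0 and 1 matter; features 2-4 are irrelevant.
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.5, size=n)
y = y - y.mean()

beta = lasso_cd(X, y, alpha=0.2)
print(np.round(beta, 3))
```

With α = 0.2, the three irrelevant coefficients end up exactly zero, while the two relevant ones are kept but shrunk toward zero by roughly α.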

Advantages of Lasso Regression:

– Automatically selects the important variables and eliminates the least important ones
– Reduces the variance of the model and helps prevent overfitting
– Provides a sparse solution when the dataset contains many irrelevant variables

Disadvantages of Lasso Regression:

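– Does not handle multicollinearity well: from a group of highly correlated variables, it tends to keep one and drop the others somewhat arbitrarily
– Results can be unstable: small changes in the data can change which variables are selected
– Performs poorly when the number of independent variables exceeds the number of observations, since it can select at most as many variables as there are observations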
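The multicollinearity weakness is easiest to see in the extreme case of two perfectly correlated features. The sketch below uses hypothetical `lasso_cd` and `ridge_fit` helpers (defined here so the block runs on its own) on synthetic data. Ridge splits the weight evenly between the two copies, while coordinate descent for lasso puts all of it on whichever copy it visits first and leaves the other at zero.

```python
import numpy as np


def soft_threshold(z, t):
    # Returns exactly 0.0 when |z| <= t, otherwise shrinks z by t.
    return np.sign(z) * max(abs(z) - t, 0.0)


def lasso_cd(X, y, alpha, n_iter=200):
    # Cyclic coordinate descent for (1/(2n))||y - Xb||^2 + alpha*||b||_1.
    n, p = X.shape
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    residual = y.copy()
    for _ in range(n_iter):
        for j in range(p):
            residual += X[:, j] * beta[j]
            beta[j] = soft_threshold(X[:, j] @ residual / n, alpha) / col_sq[j]
            residual -= X[:, j] * beta[j]
    return beta


def ridge_fit(X, y, lam):
    # Closed-form ridge: (X^T X + lam*I)^(-1) X^T y.
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


rng = np.random.default_rng(2)
n = 200
x = rng.normal(size=n)
x = (x - x.mean()) / x.std()
# Two perfectly correlated copies of the same feature.
X = np.column_stack([x, x])
y = 2.0 * x + rng.normal(scale=0.5, size=n)
y = y - y.mean()

print("lasso:", np.round(lasso_cd(X, y, alpha=0.1), 3))
print("ridge:", np.round(ridge_fit(X, y, lam=1.0), 3))
```

With exact duplicates the lasso optimum is not unique, so which variable survives is an artifact of the algorithm's visiting order rather than of the data.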

Conclusion:

In conclusion, both Ridge Regression and Lasso Regression are powerful regression techniques for continuous variables. Ridge Regression is best suited for datasets with multicollinearity, while Lasso Regression is best suited for problems with many irrelevant variables, where only a few variables actually matter. If interpretability is a priority, Lasso Regression is usually the better fit, because it produces a smaller model with fewer variables.

Use these strengths and weaknesses to decide which technique is likely to produce the best results for your study.
